Insyte and Resources

How can you solve your data quality areas of pain using the international data quality standard, ISO 8000?

Part 5

Cataloguing at Source

Abstract: This series of articles, taken together, explains how ISO 8000 can be part of your digital strategy. This International Standard can help to increase productivity in your organization, and cut the cost of a data cleansing or data onboarding project. In the final article in this series, we explain how cataloguing at source can simultaneously cut costs and increase the quality output of your data cleansing or data onboarding project.

As an end-user, how can cataloguing at source help me improve the quality of my master data records?

A common feature of capital projects is the failure of purchasing teams to specify data quality and data exchange standards in the contracts with their suppliers. Purchasing departments are very good at focusing on price, but often neglect the internal cost of reworking supplier data before it can be loaded into the Enterprise Resource Planning (ERP) system, a cost that arises when the contract does not require the supplier to deliver data that is ready to load.

The cost of the rework, whether carried out by internal resource or by an external supplier, is significant and in most cases avoidable. Rework of data, especially by third parties, significantly increases the chances of quality being compromised. When no data requirement is placed on certain document types, such as SPIR documents, the standard of the data received is very poor. SPIR documents typically describe a spare part using just a noun, and perhaps one property. The intention is to force the end-user back to the original equipment manufacturer (OEM) to buy the replacement parts; the documents even helpfully contain columns for you to fill in the quantities!

In this example, from a single 62-line SPIR document, O-Ring is described in four different ways. The Shore hardness is also missing from the details, making it impossible to safely order the part from another supplier.

Digital data, such as data that complies with ISO 8000, has the ability to transform the current methods of exchanging data between organizations. ISO 8000 compliant data is by definition computer-interpretable and portable between systems. Data referenced from a concept dictionary is exchangeable without loss of meaning, including terms in multiple languages and semantically equivalent terms in the same language. We discussed some of these examples in part 1 of this series. ISO 8000 compliant data can be considered to enable the W3C vision of the semantic web.

Purchasing teams can help the other parts of their organization by adding a simple clause to the purchase orders asking for delivery of an ISO 8000-115 identifier that resolves to an ISO 8000-110 record for the items or services that will need to be created as master data records in their ERP system:

“The supplier shall supply technical data for the products or services they supply. Each item shall contain an ISO 8000-115 compliant identifier that is resolvable to an ISO 8000-110 compliant record with free decoding of unambiguous, internationally recognized identifiers.”

So why is this so important? Free decoding ensures that everyone in the data supply chain can resolve the data without loss of meaning. ISO 8000 compliant data enables a federated approach to exchanging data. Data for items of production, created by the manufacturer in this way, can be hosted in a suitable cloud environment to be shared with everyone in the supply chain throughout the life of the product, and the life of the asset. In capital projects the data handover is normally timed for the start of operations, and this is a major issue for owner/operators, who must commit considerable resource within an artificially short time period. If that data were available as ISO 8000 compliant data in such a federated cloud, it could be accessed at an earlier stage in the project, prepared for loading, and verified using the identifier on the “as-built” documents. As the data is provided directly by the manufacturer of the item, fewer quality checks are required, the cost of the process is considerably reduced, and the quality is assured.

The international standard ISO 8000-115, introduced in early 2018, makes a significant contribution to enabling more efficient data exchange. Whilst identifiers are initially designed to be used internally within a system that belongs to an organization, those organizations frequently exchange identifiers with external parties. These identifiers become aliases for data that is controlled and managed by the owner of the identifier.

Verifying and validating the quality of master data depends on an ability to identify the owner of data and any use restrictions on that data. This verification and validation also requires an ability to resolve identifiers to the data sets that they identify. By definition, ISO 8000-115 identifiers resolve to an ISO 8000-110 compliant data set.
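The resolution step described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `prefix:string` shape of the identifier, the `ACME` prefix, the record contents, and the in-memory `REGISTRY` standing in for a resolution service run by, or on behalf of, the identifier owner. It is not the resolution mechanism defined by the standard.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical stand-in for an ISO 8000-110 compliant data set."""
    owner: str        # the organization that owns and manages the data
    properties: dict  # property name -> value with unit, for illustration only


# Hypothetical registry keyed by owner prefix, then by the owner's local string.
REGISTRY = {
    "ACME": {
        "ORING-0042": Record(
            owner="ACME Seals Ltd",
            properties={"inside diameter": "25 mm", "hardness": "70 Shore A"},
        ),
    },
}


def split_identifier(identifier):
    """Split an identifier of the assumed form 'prefix:string' into its two parts."""
    prefix, separator, local = identifier.partition(":")
    if not separator or not prefix or not local:
        raise ValueError(f"not of the form prefix:string: {identifier!r}")
    return prefix, local


def resolve(identifier, registry=REGISTRY):
    """Return the record that the identifier is an alias for."""
    prefix, local = split_identifier(identifier)
    try:
        return registry[prefix][local]
    except KeyError:
        raise LookupError(f"cannot resolve {identifier!r}") from None


record = resolve("ACME:ORING-0042")
print(record.owner, record.properties["hardness"])
```

The point of the sketch is that the prefix identifies who owns the data, so a consumer can always tell where the record came from, and an identifier that cannot be resolved is rejected rather than guessed at.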

Manufacturers own the data for the products they produce. In any data exchange, they are the suppliers of the data, in the same way that they are the suppliers of the product. The crucial role they play in the data exchange supply chain, which finishes with end-users, has not always been apparent to manufacturers.

A reluctance to exchange data with users of their products has resulted in the current situation whereby all parties agree that the quality of data in the marketplace is not fit for purpose. There is often also a disconnect between the “single version of the truth” held by the marketing department for publication in catalogues, and the “single version of the truth” required by the technical sales teams of manufacturers who require the specifications of all the variants that are not available in catalogues.

This disconnect is one of the causes of the problems with third party data cleaners, who do not recognize the variants in the data sets they are looking at, because these part numbers do not match the data found in catalogues.

There have been studies in different sectors, particularly the electrical distribution sector, where trials have taken place with select groups of manufacturers and distributors to directly exchange product data, and the results have been conclusive in reducing the cost of incorrect orders.

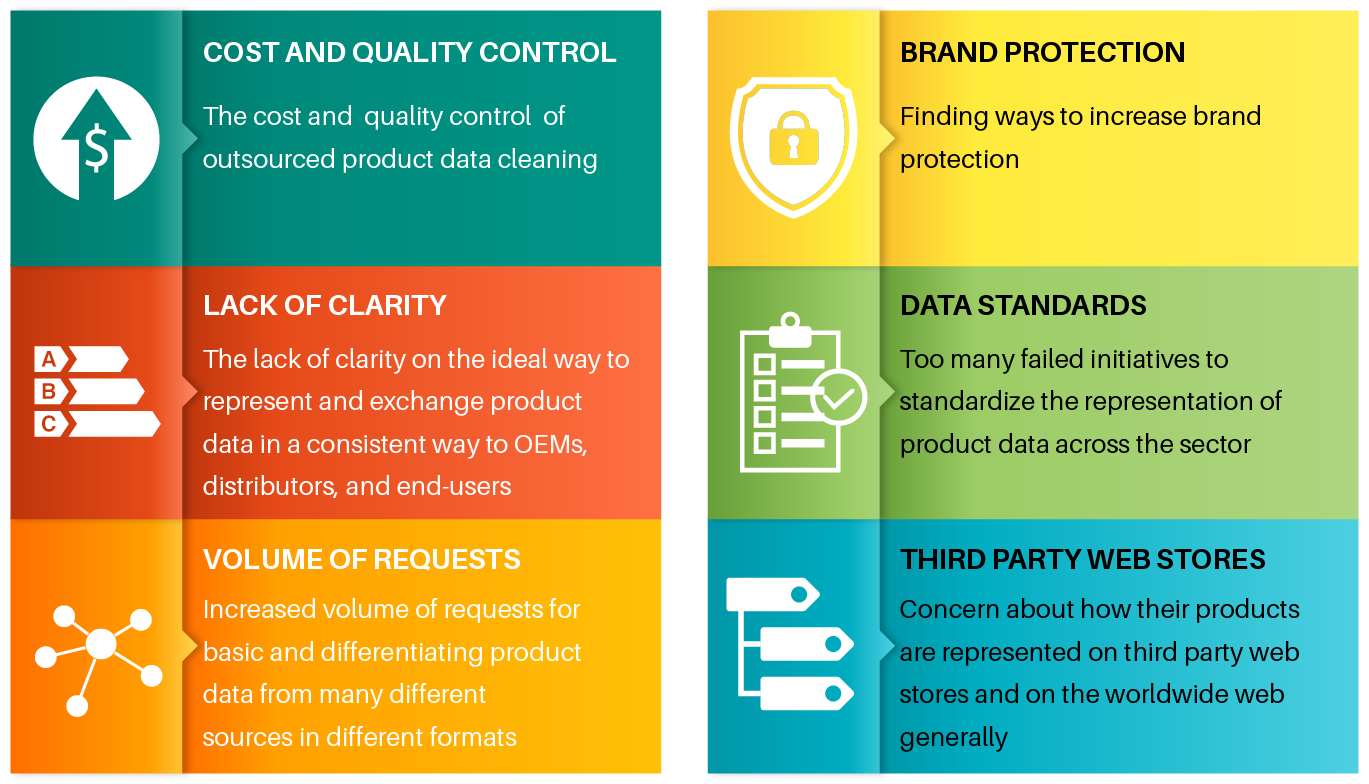

Extensive discussions with manufacturers in the bearing and power transmission sector revealed that they are now seeing that they have a responsibility to deal with the issue. Manufacturers' current concerns can be broken down into six areas.

What is clear from the discussion of these issues with both the suppliers of the data and the consumers of the data is that there is a requirement for a federated platform for exchanging data: a platform where the manufacturers post their product specifications in a standard, exchangeable format. The consumers of that data, be they distributors or end-users, can access that data and import it simply into their system, safe in the knowledge that the data has the provenance of the manufacturer of the product.

The issues of data quality in end-user systems are frequently caused by data that lacks the provenance of the manufacturer of the product. Such a platform would deliver tangible and valuable benefits for the whole supply chain, not only for the life-cycle of the product but also for the life-cycle of the equipment that the product is part of. As early failures to establish this type of platform have proved, the only way such a platform could operate is if it adopted international data quality and data exchange standards.

International standards are a consensus of best practice. There are a number of issues that previous initiatives have failed to overcome, which are common to the challenges facing those parties trying to promote interoperability. These challenges include common terminology and multiple languages, both of which are dealt with in the ISO 8000 data quality standard when combined with a concept dictionary as discussed in part 1 of this series.

New parts of the standard, ISO 8000-115 and ISO 8000-116, will also help in the fight against counterfeiting, by establishing authoritative identifiers for the supply chain. The work of the previous initiatives is not in vain. Taking these initiatives and applying the ISO 8000 data standard, and specifically the ISO 8000-110 data exchange methodology, will allow all the positive aspects of the previous work to be incorporated into an exchange mechanism that works for all parties.

There are numerous studies examining the cost of poor quality data to organizations. Harvard Business Review put the cost at $3 trillion per year. Rule number 1 of any quality system is to get quality right first time. It has been claimed that the impact of bad data amplifies ten-fold and manifests as the 1-10-100 rule: if the cost to fix a data error at the time of entry is $1, the cost to fix it an hour after it’s been entered escalates to $10. Fix it later, and the cost becomes $100. In terms of productivity losses, the amount of time that knowledge workers waste in hidden data factories, hunting for data, finding and correcting errors, and searching for confirmatory sources for data they do not trust is estimated at 50%. These figures, of course, do not relate solely to materials data, but they are indicative of the wider issues caused by poor data quality.
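As a rough illustration of the 1-10-100 rule, the arithmetic can be sketched as below. The stage names and the batch of 1,000 errors are hypothetical, chosen only to show how quickly the total grows when corrections are deferred; they are not figures from any of the studies mentioned.

```python
# Relative cost of fixing one error at each stage, per the 1-10-100 rule.
STAGE_COST = {"at entry": 1, "shortly after entry": 10, "later": 100}


def correction_cost(errors_by_stage, unit_cost=1):
    """Total cost of fixing errors, given how many are fixed at each stage."""
    return sum(
        STAGE_COST[stage] * count * unit_cost
        for stage, count in errors_by_stage.items()
    )


# Hypothetical batch of 1,000 errors, all caught at the time of entry.
right_first_time = correction_cost({"at entry": 1000})

# The same 1,000 errors, with 200 fixed shortly after entry and 100 left until later.
deferred = correction_cost(
    {"at entry": 700, "shortly after entry": 200, "later": 100}
)

print(right_first_time)  # 1000
print(deferred)          # 12700
```

In this made-up batch, leaving only one error in ten until later accounts for most of the total, which is the argument for getting quality right first time.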

In the book Shredded: Inside RBS, The Bank That Broke Britain, by Ian Fraser, it is revealed that 1,000 of the 10,000 back office staff spent their working lives doing nothing other than data clean-up and reconciliation. This may, or may not, be indicative of the issues in the financial sector; however, the introduction of ISO 8000-115 and ISO 8000-116, the new standards for identifiers, is a response in part to the problems that sector has with trade reconciliation.

Costs vary across organizations and sectors, but a speaker from a global super-major oil and gas company stated at a conference more than ten years ago that it cost them $250 to create a master data record after accounting for all the internal and external costs. You should consider how much it costs you to correct data, and the time wasted in your organization trying to find items that are not described correctly. In most cases the wasted costs far outweigh the costs of implementing the processes we have outlined in this series.

Digital data is the key to future system interoperability. In 2017 and 2018, five high profile standards and initiatives were drafted and published:

- Digital Transformation Monitor, Germany: Industrie 4.0 (i40), January 2017;

- New European Interoperability Framework: Promoting seamless services and data flows for European public administrations, NO-07-16-042-EN-N, 2017;

- ISO/IEC 30182:2017 Smart city concept model — Guidance for establishing a model for data interoperability;

- Mapping Underground Assets in the UK;

- ISO/DTS 18101 – oil and gas interoperability.

All of the publications highlighted the barriers to interoperability, and therefore to the implementation of these standards and initiatives. These barriers can be summarised as follows, and all of them have been covered in this series of articles:

- The use of open standards;

- The management of open data;

- The creation of a common (multilingual) terminology;

- The creation of a common taxonomy;

- The implementation of a common (international) data standard;

- The adoption of a standard open architecture including web APIs;

- The management of digital assets, including digital twins.

This is the final part of this five-part series. I hope you have enjoyed reading the articles, and more importantly, I hope the articles have helped to demystify the world of data standards and the part that ISO 8000 can play in helping you to increase productivity in your organization, and cut the cost of a data cleansing or data onboarding project. In part 1 of the series, we explained the role that data dictionaries play in improving your data quality. In part 2, we explained the role of the data specification in improving data quality. In part 3, we explained how to create a data specification in a way that ensures data quality is built into the final master data record. In part 4, we explained how to create a catalogue item, and how to render short and long descriptions from that catalogue item. In this part, we explained how cataloguing at source can simultaneously cut costs and increase the quality output of your data cleansing or data onboarding project.

To sum up the five parts in this series, in order to improve the quality of your data:

- you shall use a data dictionary with an international registration data identifier (IRDI) for each data dictionary entry;

- your data specifications shall reference a data dictionary;

- your data specifications shall have appropriate data types with which to control the values;

- you shall manage data from the “bottom up”, i.e., from the smallest meaningful elements, the property value and the unit of measure;

- you shall specify that ISO 8000-110 compliant data is also delivered when purchasing products and services.
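The first four points in the list above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not an ISO 8000 implementation: the dictionary identifiers such as `EX-PROP-001` are placeholders rather than real IRDIs, and `PropertySpec`, `O_RING_SPEC`, and `validate` are hypothetical names introduced here.

```python
from dataclasses import dataclass

# Hypothetical data dictionary: identifier -> term. Placeholder identifiers, not real IRDIs.
DICTIONARY = {
    "EX-PROP-001": "inside diameter",
    "EX-PROP-002": "hardness",
    "EX-UOM-001": "millimetre",
    "EX-UOM-002": "Shore A",
}


@dataclass
class PropertySpec:
    """One line of a data specification; it references the dictionary by identifier."""
    property_id: str
    data_type: type   # the data type used to control the value
    unit_id: str      # the expected unit of measure
    required: bool = True


# Hypothetical data specification for an O-ring.
O_RING_SPEC = [
    PropertySpec("EX-PROP-001", float, "EX-UOM-001"),
    PropertySpec("EX-PROP-002", int, "EX-UOM-002"),
]


def validate(item, spec, dictionary=DICTIONARY):
    """Return a list of problems; an empty list means the item meets the specification."""
    problems = []
    for prop in spec:
        name = dictionary[prop.property_id]
        if prop.property_id not in item:
            if prop.required:
                problems.append(f"missing required property: {name}")
            continue
        value, unit_id = item[prop.property_id]
        if not isinstance(value, prop.data_type):
            problems.append(f"{name}: expected {prop.data_type.__name__}")
        if unit_id != prop.unit_id:
            problems.append(f"{name}: expected unit {dictionary[prop.unit_id]}")
    return problems


# Each property is held as (value, unit of measure identifier): the "bottom up" elements.
complete_item = {
    "EX-PROP-001": (25.0, "EX-UOM-001"),
    "EX-PROP-002": (70, "EX-UOM-002"),
}
spir_like_item = {"EX-PROP-001": (25.0, "EX-UOM-001")}  # hardness not supplied

print(validate(complete_item, O_RING_SPEC))   # []
print(validate(spir_like_item, O_RING_SPEC))  # ['missing required property: hardness']
```

Because the specification refers to dictionary identifiers rather than free text, the same check works whatever language or synonym is used to display the term, and a record with a missing property is flagged before it is loaded rather than after.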

About the author

Peter Eales

Chief Executive MRO Insyte

Peter Eales is a subject matter expert on MRO (maintenance, repair, and operations) material management and industrial data quality. Peter is an experienced consultant, trainer, writer, and speaker on these subjects. Peter is recognised by BSI and ISO as an expert in the subject of industrial data. Peter is a member of ISO/TC 184/SC 4/WG 13, the ISO standards development committee that develops standards for industrial data and industrial interfaces, ISO 8000, ISO 29002, and ISO 22745, and is also a committee member of ISO/TC 184/WG 6, which is developing the standard for Oil and Gas Interoperability, ISO 18101.

Peter has previously held positions as the global technical authority for materials management at a global EPC, and as the global subject matter expert for master data at a major oil and gas owner/operator. Peter is currently chief executive of MRO Insyte, and chairman of KOIOS Master Data.

Peter also acts as a consultant for ECCMA, and is a member of the examination board for the ECCMA ISO 8000 MDQM certification.

ECCMA is a membership organization and is the project leader for ISO 22745 and ISO 8000.

KOIOS Master Data is a world leading cloud MDM solution enabling ISO 8000 compliant data exchange.

MRO Insyte is an MRO consultancy advising organizations in all aspects of materials management.